Arvind Narayanan

Princeton University; Knight Institute Visiting Senior Research Scientist 2022-2023

Arvind Narayanan is a professor of computer science at Princeton University and the director of the Center for Information Technology Policy. He is a co-author of the book AI Snake Oil and of a newsletter of the same name, which is read by 50,000 researchers, policy makers, journalists, and AI enthusiasts. He previously co-authored two widely used computer science textbooks: Bitcoin and Cryptocurrency Technologies and Fairness in Machine Learning. Narayanan led the Princeton Web Transparency and Accountability Project to uncover how companies collect and use our personal information. His work was among the first to show how machine learning reflects cultural stereotypes. Narayanan was named to TIME's inaugural list of the 100 most influential people in AI. He is a recipient of the Presidential Early Career Award for Scientists and Engineers (PECASE).

Narayanan was the Knight First Amendment Institute’s 2022-2023 visiting senior research scientist. He carried out a research project on algorithmic amplification on social media and hosted a major conference on the topic in Spring 2023.

Selected Projects

-

Algorithmic Amplification and Society

A project studying algorithmic amplification and distortion, and exploring ways to minimize harmful amplifying or distorting effects

Selected Events

-

Optimizing for What? Algorithmic Amplification and Society

A two-day symposium exploring algorithmic amplification and distortion as well as potential interventions

Writings & Appearances

-

Deep Dive

We Looked at 78 Election Deepfakes. Political Misinformation Is Not an AI Problem.

Technology isn’t the problem—or the solution.

-

Deep Dive

A Safe Harbor for AI Evaluation and Red Teaming

An argument for legal and technical safe harbors for AI safety and trustworthiness research

-

Deep Dive

Generative AI companies must publish transparency reports

The debate about AI harms is happening in a data vacuum.

-

Essays and Scholarship

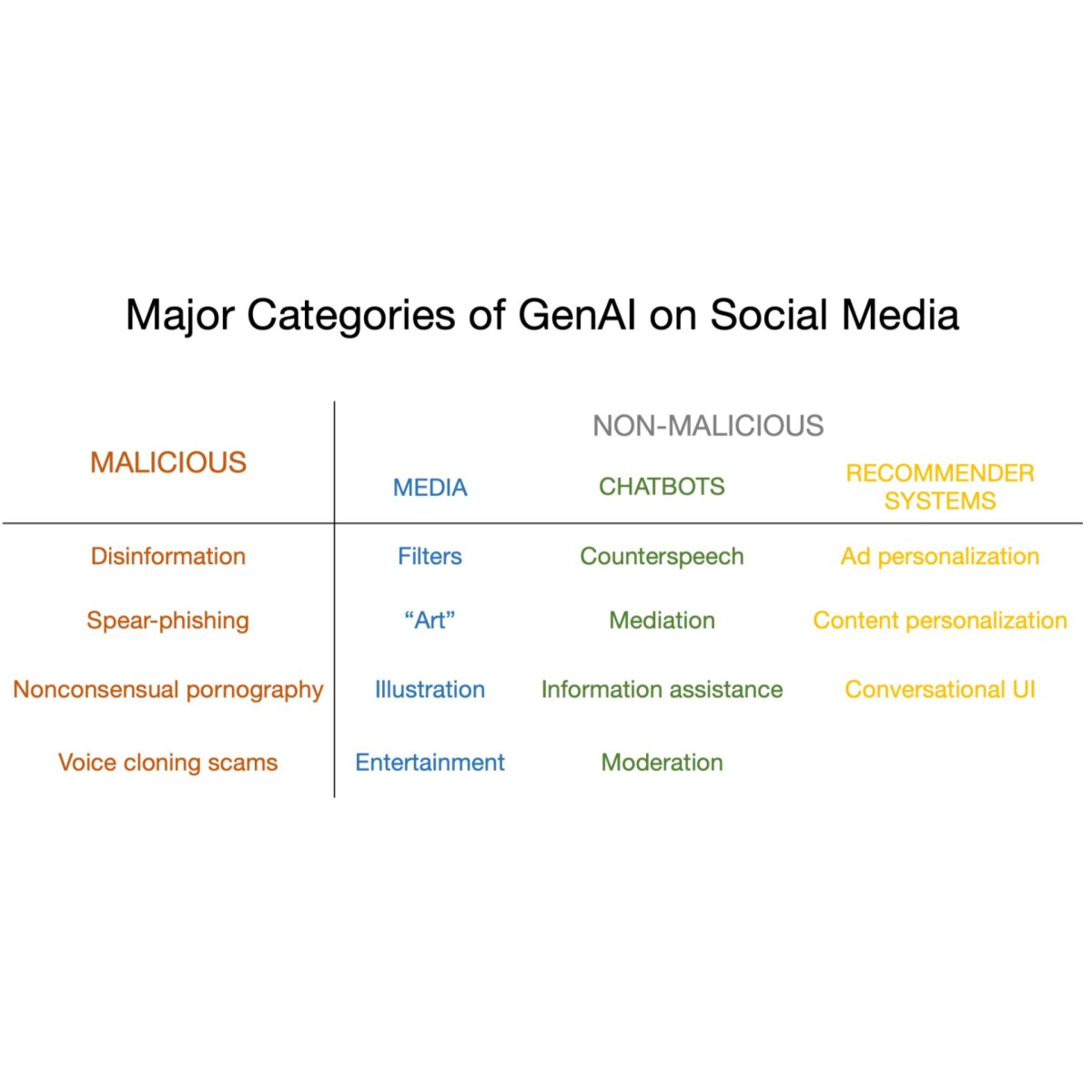

How to Prepare for the Deluge of Generative AI on Social Media

A grounded analysis of the challenges and opportunities